Cognitive Bias Resistance

Hito is trained specifically to resist cognitive biases that trip up humans and many AI models alike.

The Bat and Ball Test

"A bat and a ball cost $1.10 together. The bat costs $1.00 more than the ball. How much does the ball cost?"

Most people (and many AI models) instinctively answer 10 cents. That's wrong.

| Model | Parameters | Answer | Correct |
|---|---|---|---|
| Hito 1.7B | 1.7B | $0.05 | ✅ |
| llama3.1 | 8B | $0.10 | ❌ |
| deepseek-r1 | 7B | $0.10 | ❌ |
| deepseek-r1 | 32B | $0.10 | ❌ |
| mistral | 7B | $0.10 | ❌ |
| tinyllama | 1.1B | $0.10 | ❌ |
| llama3.2 | 1B | $0.10 | ❌ |

Hito's reasoning:

```
<think>
Ball + Bat = $1.10, Bat = Ball + $1.00
Intuition says 10 cents... but let me verify.
If ball = $0.10, bat = $1.10, total = $1.20. WRONG.
Let ball = x: x + (x + 1) = 1.10, 2x = 0.10, x = 0.05
Ball $0.05 + Bat $1.05 = $1.10 ✓
</think>
The ball costs five cents.
```
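The arithmetic in the trace can be checked mechanically. A minimal sketch in Python, using the standard `decimal` module (chosen here to avoid binary floating-point rounding on currency amounts); `solve_ball` and `total_for` are illustrative helper names:

```python
from decimal import Decimal

TOTAL = Decimal("1.10")       # bat + ball
DIFFERENCE = Decimal("1.00")  # bat - ball

def solve_ball(total: Decimal, difference: Decimal) -> Decimal:
    # ball + (ball + difference) = total  =>  ball = (total - difference) / 2
    return (total - difference) / 2

def total_for(ball: Decimal, difference: Decimal) -> Decimal:
    # Combined price of both items if the ball costs `ball`
    return ball + (ball + difference)

ball = solve_ball(TOTAL, DIFFERENCE)
print(f"ball = ${ball}, bat = ${ball + DIFFERENCE}")
print(f"intuitive answer $0.10 gives a total of ${total_for(Decimal('0.10'), DIFFERENCE)}")
```

Running it prints `ball = $0.05, bat = $1.05` and shows that the intuitive $0.10 answer would make the total $1.20.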

Benchmark Results

Tested against public Ollama endpoints with identical prompts:

| Model | Params | Counting | Math | Reasoning | Cognitive Bias | Overall |
|---|---|---|---|---|---|---|
| Hito 1.7B | 1.7B | 100% | 100% | 100% | ✅ Resistant | 100% |
| llama3.1 | 8B | 100% | 67% | 100% | ❌ Fails | 89% |
| deepseek-r1:7b | 7B | 100% | 67% | 100% | ❌ Fails | 89% |
| deepseek-r1:32b | 32B | 100% | 67% | 100% | ❌ Fails | 89% |
| mistral | 7B | 33% | 67% | 100% | ❌ Fails | 67% |
| llama3.2 | 1B | 0% | 67% | 67% | ❌ Fails | 44% |
| tinyllama | 1.1B | 0% | 33% | 33% | ❌ Fails | 33% |

Note: The Cognitive Bias test uses the bat-and-ball problem. Models marked "Fails" gave the intuitive wrong answer ($0.10) instead of the correct answer ($0.05).
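The evaluation harness behind these numbers is not published in this card. Purely as an illustration, a pass/fail check for the bat-and-ball item against an Ollama server might look like the sketch below. `grade_bat_and_ball`, `ask_ollama` and the grading regexes are hypothetical; the request uses Ollama's `/api/chat` endpoint with `"stream": false`, and `localhost:11434` is Ollama's default address (substitute the public endpoint you are testing). The regexes are deliberately crude: a reply such as "not 10 cents but 5 cents" would be marked as a failure.

```python
import json
import re
import urllib.request

# Hypothetical grading rules: the visible answer must state $0.05 and must not state $0.10
CORRECT = re.compile(r"(?<![\d.])\$?0?\.05\b|\b(?:5|five) cents?\b", re.IGNORECASE)
WRONG = re.compile(r"(?<![\d.])\$?0?\.10\b|\b(?:10|ten) cents?\b", re.IGNORECASE)
THINK = re.compile(r"<think>.*?</think>", re.DOTALL)

BAT_AND_BALL = ("A bat and a ball cost $1.10 together. The bat costs $1.00 more "
                "than the ball. How much does the ball cost?")

def grade_bat_and_ball(reply: str) -> bool:
    """True if the reply, minus any <think> block, gives $0.05 and not $0.10."""
    visible = THINK.sub("", reply)  # the reasoning may legitimately mention 10 cents
    return bool(CORRECT.search(visible)) and not WRONG.search(visible)

def ask_ollama(model: str, prompt: str, host: str = "http://localhost:11434") -> str:
    """Send one non-streaming request to Ollama's /api/chat endpoint; return the reply text."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }).encode("utf-8")
    request = urllib.request.Request(
        f"{host}/api/chat", data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["message"]["content"]
```

With a running server, `grade_bat_and_ball(ask_ollama("llama3.1", BAT_AND_BALL))` would score a single model; sending the same `BAT_AND_BALL` string to every model keeps the prompts identical.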

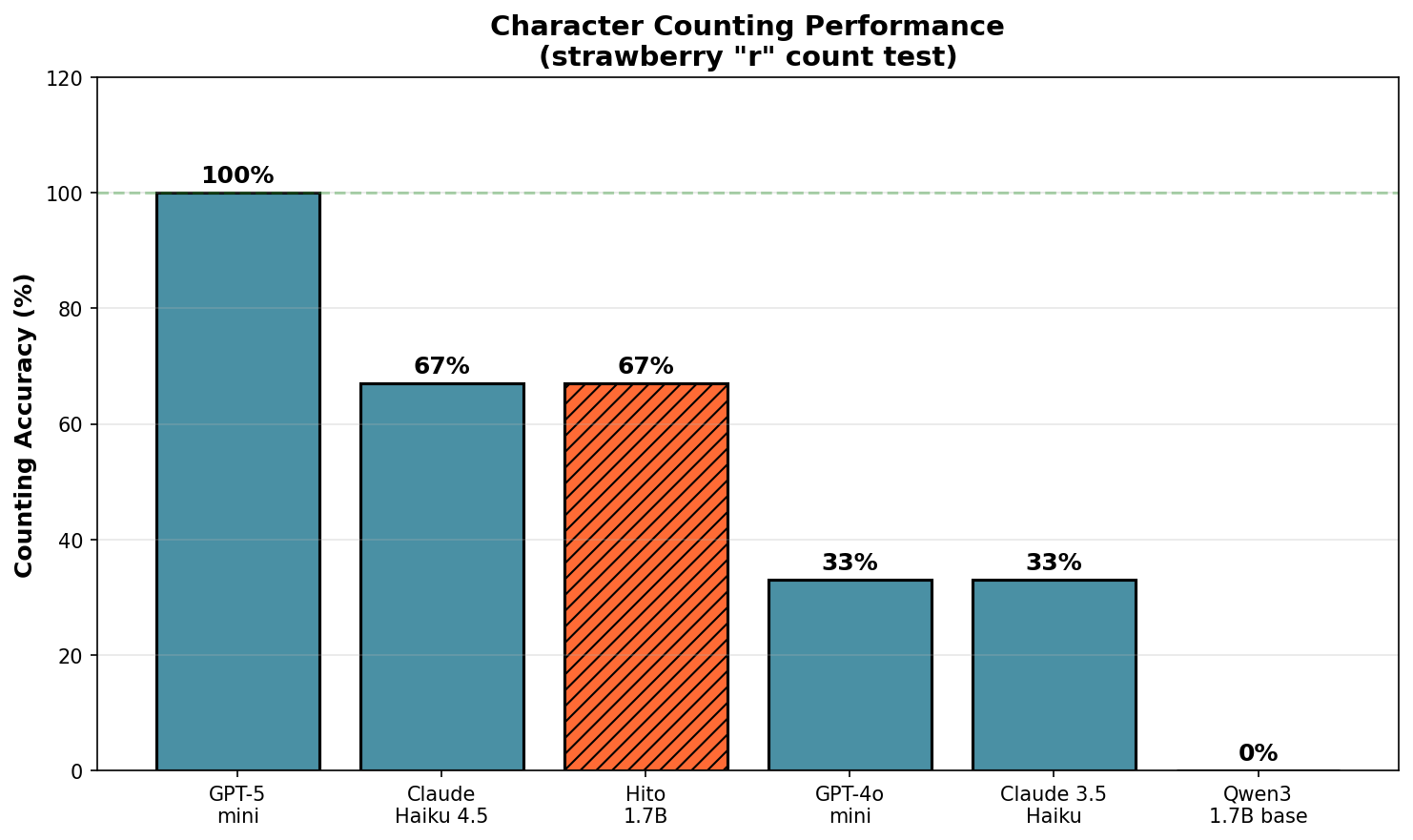

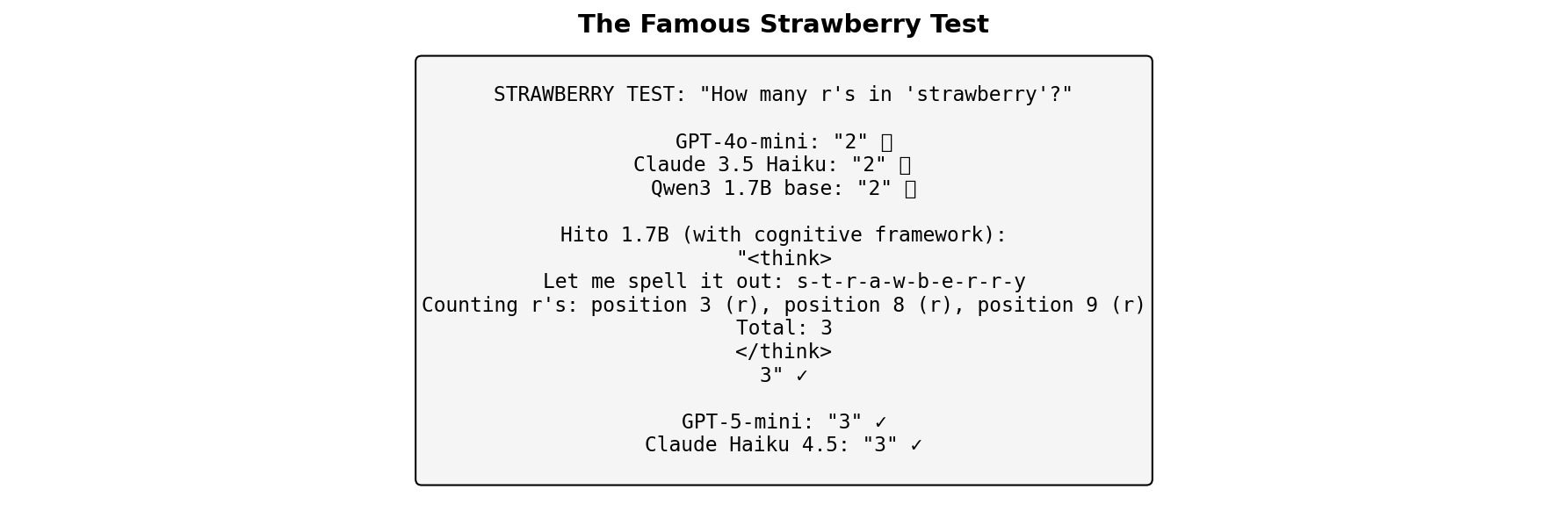

Visual Benchmarks

What Makes Hito Different

1. Cognitive Bias Resistance

In the bat-and-ball test, models as large as 32B parameters fell for the intuitive trap; Hito is trained to stop and verify before answering.

2. Structured Thinking

Uses `<think>` tags for transparent, traceable reasoning.

3. Self-Aware Identity

Hito knows who it is, who made it, and what it is for, rather than giving generic "I'm an AI assistant" responses.

4. Humble by Design

Admits uncertainty rather than hallucinating answers.
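Because the reasoning is wrapped in `<think>` tags, it can be separated from the final answer after generation, for example to log the trace while showing users only the answer. A minimal sketch, assuming the reply contains at most one `<think>...</think>` block, as in the trace above; `split_reasoning` is a hypothetical helper, not part of any Hitonet library:

```python
import re

THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(text: str) -> tuple[str, str]:
    """Split a reply into (reasoning, answer), assuming at most one <think>...</think> block."""
    match = THINK_BLOCK.search(text)
    if match is None:
        return "", text.strip()  # no reasoning block: the whole reply is the answer
    reasoning = match.group(1).strip()
    # Everything outside the block (usually just what follows it) is the answer
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer
```

A non-greedy match with `re.DOTALL` keeps multi-line traces intact without swallowing text after the closing tag.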

Quick Start

Python (Transformers)

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "hitonet/hito-1.7b"
# device_map="auto" requires the accelerate package
model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto", device_map="auto")
tokenizer = AutoTokenizer.from_pretrained(model_id)

messages = [
    {"role": "system", "content": "You are Hito by Hitonet.com."},
    {"role": "user", "content": "A bat and ball cost $1.10. The bat costs $1 more than the ball. How much is the ball?"},
]

# Format the conversation with the model's chat template and open the assistant turn
inputs = tokenizer.apply_chat_template(messages, return_tensors="pt", add_generation_prompt=True).to(model.device)
outputs = model.generate(inputs, max_new_tokens=512, temperature=0.7, do_sample=True)

# Decode only the newly generated tokens; special tokens are kept so no markers are stripped
print(tokenizer.decode(outputs[0][inputs.shape[-1]:], skip_special_tokens=False))
```

Ollama

```bash
# Download a GGUF file from hitonet/hito-1.7b-GGUF, then point a Modelfile's FROM line at it
ollama create hito -f Modelfile
ollama run hito
```
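The Modelfile itself is not shown in this card. A minimal sketch for producing one, assuming the `huggingface_hub` package is installed and that the GGUF repository contains a file whose name includes the quantization tag (e.g. `Q4_K_M`). `pick_gguf`, `modelfile_text` and `download_gguf` are hypothetical helpers; the `SYSTEM` line reuses the system prompt from the Transformers example. Depending on your Ollama version, you may also need a `TEMPLATE` line matching the model's chat format.

```python
from pathlib import Path

REPO_ID = "hitonet/hito-1.7b-GGUF"
SYSTEM_PROMPT = "You are Hito by Hitonet.com."

def pick_gguf(filenames: list[str], quant: str = "Q4_K_M") -> str:
    """Return the first .gguf file whose name contains the quantization tag (case-insensitive)."""
    for name in filenames:
        if name.lower().endswith(".gguf") and quant.lower() in name.lower():
            return name
    raise ValueError(f"no {quant} .gguf file found")

def modelfile_text(gguf_path: str, system: str = SYSTEM_PROMPT) -> str:
    """Build a minimal Ollama Modelfile: base weights plus a default system message."""
    return f'FROM {gguf_path}\nSYSTEM """{system}"""\n'

def download_gguf(quant: str = "Q4_K_M") -> str:
    """Download one quantization from the Hub and return its local path (needs network)."""
    from huggingface_hub import hf_hub_download, list_repo_files  # imported lazily
    filename = pick_gguf(list_repo_files(REPO_ID), quant)
    return hf_hub_download(repo_id=REPO_ID, filename=filename)

def write_modelfile(path: str = "Modelfile", quant: str = "Q4_K_M") -> None:
    """Download the chosen GGUF and write a Modelfile that points at it."""
    Path(path).write_text(modelfile_text(download_gguf(quant)))
```

Calling `write_modelfile()` and then running the two `ollama` commands above should register and start the model.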

API

```bash
curl https://api.hitonet.com/v1/chat/completions \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{"model": "hito", "messages": [{"role": "user", "content": "Hello!"}]}'
```

Try the full API at platform.hitonet.com ($1 free credit included).
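The same request can be sent from Python using only the standard library. The request body mirrors the curl call; the response parsing assumes an OpenAI-style `choices[0].message.content` layout, which the `/v1/chat/completions` path suggests but this card does not confirm. `build_request`, `extract_reply` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

API_URL = "https://api.hitonet.com/v1/chat/completions"

def build_request(api_key: str, content: str, model: str = "hito") -> urllib.request.Request:
    """Build the same POST request as the curl example."""
    payload = {"model": model, "messages": [{"role": "user", "content": content}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

def extract_reply(response: dict) -> str:
    """Pull the assistant text, ASSUMING an OpenAI-style choices[0].message.content shape."""
    return response["choices"][0]["message"]["content"]

def chat(api_key: str, content: str) -> str:
    """Send one message and return the reply text (needs network and a valid key)."""
    with urllib.request.urlopen(build_request(api_key, content)) as resp:
        return extract_reply(json.load(resp))
```

Usage: `print(chat("YOUR_API_KEY", "Hello!"))`.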

Model Variants

| Repository | Format | Use Case |
|---|---|---|
| hitonet/hito-1.7b | Safetensors | Python/Transformers |
| hitonet/hito-1.7b-GGUF | GGUF | Ollama/llama.cpp/LM Studio |

Recommended GGUF Quantizations

| Quantization | Size | Quality | Use Case |
|---|---|---|---|
| Q4_K_M | 1.1 GB | Best Balance | Most users |
| Q5_K_M | 1.2 GB | Excellent | Quality-focused |
| Q8_0 | 1.8 GB | Highest | Maximum quality |

Licensing

| Component | License | Commercial Use |
|---|---|---|
| Model Weights | Apache 2.0 | ✅ Free to use |
| Training Methodology | Proprietary | ⚠️ Commercial License Required |

Model Weights (Apache 2.0)

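The model weights are open source under the Apache 2.0 license. You may use, modify, and distribute them, provided you follow the license terms (for example, including a copy of the license and retaining its notices).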

Training Methodology (Commercial License Required)

The training methodology, cognitive framework, and structured thinking approach used to create this model are proprietary to Hitonet.

Commercial use of the training methodology requires a license. This includes, but is not limited to:

- Replicating the training approach to create similar models
- Using the methodology in commercial products or services
- Creating derivative works based on the training techniques

Attribution is mandatory when using this model or discussing its capabilities.

For commercial licensing inquiries: [email protected]

Links

- Website: hitonet.com

- Chat: chat.hitonet.com

- API: platform.hitonet.com

- Blog: hitonet.com/blog

Teaching AI to think, doubt, and learn.
