Commit

·

1c8d125

1

Parent(s):

c02fe3a

initial commit

Browse filesThis view is limited to 50 files because it contains too many changes.

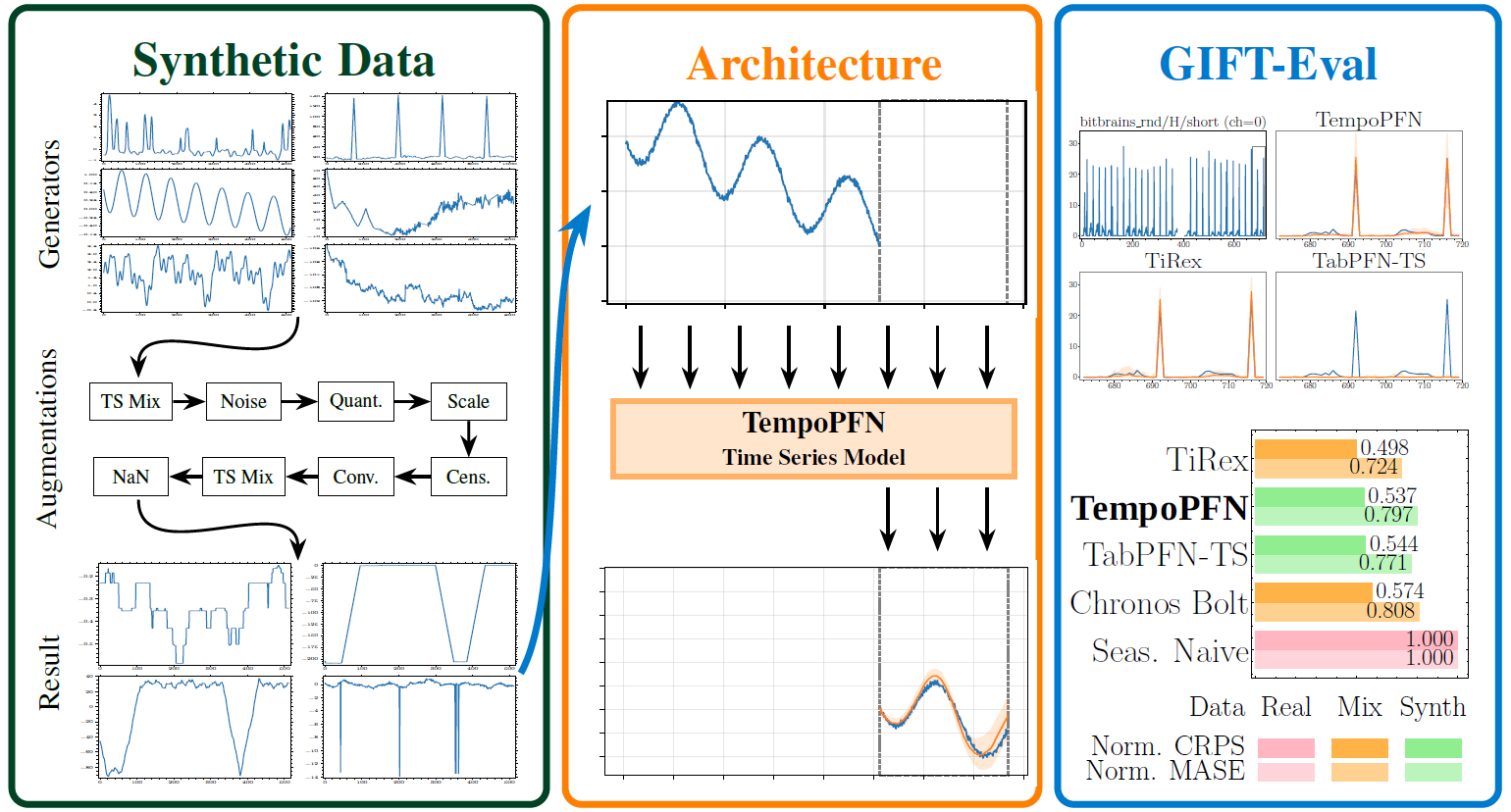

See raw diff

- .gitignore +167 -0

- README.md +128 -2

- configs/example.yaml +118 -0

- data/nan_stats.json +0 -0

- examples/generate_synthetic_data.py +324 -0

- examples/quick_start_tempo_pfn.ipynb +286 -0

- examples/quick_start_tempo_pfn.py +95 -0

- examples/utils.py +115 -0

- gift_eval/submission/all_results.csv +98 -0

- gift_eval/submission/config.json +6 -0

- pyproject.toml +57 -0

- src/__init__.py +0 -0

- src/data/__init__.py +0 -0

- src/data/augmentations.py +1318 -0

- src/data/batch_composer.py +705 -0

- src/data/constants.py +25 -0

- src/data/containers.py +272 -0

- src/data/datasets.py +267 -0

- src/data/filter.py +73 -0

- src/data/frequency.py +538 -0

- src/data/loaders.py +661 -0

- src/data/scalers.py +360 -0

- src/data/time_features.py +564 -0

- src/data/utils.py +75 -0

- src/gift_eval/__init__.py +0 -0

- src/gift_eval/aggregate_results.py +160 -0

- src/gift_eval/constants.py +83 -0

- src/gift_eval/data.py +234 -0

- src/gift_eval/dataset_properties.json +152 -0

- src/gift_eval/evaluate.py +529 -0

- src/gift_eval/model_wrapper.py +349 -0

- src/models/__init__.py +0 -0

- src/models/blocks.py +58 -0

- src/models/model.py +427 -0

- src/optim/lr_scheduler.py +360 -0

- src/plotting/__init__.py +0 -0

- src/plotting/gift_eval_utils.py +215 -0

- src/plotting/plot_timeseries.py +292 -0

- src/synthetic_generation/__init__.py +0 -0

- src/synthetic_generation/abstract_classes.py +97 -0

- src/synthetic_generation/anomalies/anomaly_generator.py +293 -0

- src/synthetic_generation/anomalies/anomaly_generator_wrapper.py +64 -0

- src/synthetic_generation/audio_generators/financial_volatility_generator.py +103 -0

- src/synthetic_generation/audio_generators/financial_volatility_wrapper.py +91 -0

- src/synthetic_generation/audio_generators/multi_scale_fractal_generator.py +75 -0

- src/synthetic_generation/audio_generators/multi_scale_fractal_wrapper.py +77 -0

- src/synthetic_generation/audio_generators/network_topology_generator.py +113 -0

- src/synthetic_generation/audio_generators/network_topology_wrapper.py +93 -0

- src/synthetic_generation/audio_generators/stochastic_rhythm_generator.py +86 -0

- src/synthetic_generation/audio_generators/stochastic_rhythm_wrapper.py +81 -0

.gitignore

ADDED

|

@@ -0,0 +1,167 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

logs/

|

| 2 |

+

*.png

|

| 3 |

+

*.pth

|

| 4 |

+

# *.sh

|

| 5 |

+

*.slurm

|

| 6 |

+

*.pkl

|

| 7 |

+

|

| 8 |

+

wandb/

|

| 9 |

+

AutogluonModels/

|

| 10 |

+

.vscode/

|

| 11 |

+

|

| 12 |

+

# Byte-compiled / optimized / DLL files

|

| 13 |

+

__pycache__/

|

| 14 |

+

*.py[cod]

|

| 15 |

+

*$py.class

|

| 16 |

+

|

| 17 |

+

# C extensions

|

| 18 |

+

*.so

|

| 19 |

+

|

| 20 |

+

# Distribution / packaging

|

| 21 |

+

.Python

|

| 22 |

+

build/

|

| 23 |

+

develop-eggs/

|

| 24 |

+

dist/

|

| 25 |

+

downloads/

|

| 26 |

+

eggs/

|

| 27 |

+

.eggs/

|

| 28 |

+

lib/

|

| 29 |

+

lib64/

|

| 30 |

+

parts/

|

| 31 |

+

sdist/

|

| 32 |

+

var/

|

| 33 |

+

wheels/

|

| 34 |

+

share/python-wheels/

|

| 35 |

+

*.egg-info/

|

| 36 |

+

.installed.cfg

|

| 37 |

+

*.egg

|

| 38 |

+

MANIFEST

|

| 39 |

+

|

| 40 |

+

# PyInstaller

|

| 41 |

+

# Usually these files are written by a python script from a template

|

| 42 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 43 |

+

*.manifest

|

| 44 |

+

*.spec

|

| 45 |

+

|

| 46 |

+

# Installer logs

|

| 47 |

+

pip-log.txt

|

| 48 |

+

pip-delete-this-directory.txt

|

| 49 |

+

|

| 50 |

+

# Unit test / coverage reports

|

| 51 |

+

htmlcov/

|

| 52 |

+

.tox/

|

| 53 |

+

.nox/

|

| 54 |

+

.coverage

|

| 55 |

+

.coverage.*

|

| 56 |

+

.cache

|

| 57 |

+

nosetests.xml

|

| 58 |

+

coverage.xml

|

| 59 |

+

*.cover

|

| 60 |

+

*.py,cover

|

| 61 |

+

.hypothesis/

|

| 62 |

+

.pytest_cache/

|

| 63 |

+

cover/

|

| 64 |

+

|

| 65 |

+

# PyBuilder

|

| 66 |

+

.pybuilder/

|

| 67 |

+

target/

|

| 68 |

+

|

| 69 |

+

# Jupyter Notebook

|

| 70 |

+

.ipynb_checkpoints

|

| 71 |

+

|

| 72 |

+

# IPython

|

| 73 |

+

profile_default/

|

| 74 |

+

ipython_config.py

|

| 75 |

+

|

| 76 |

+

# pyenv

|

| 77 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 78 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 79 |

+

# .python-version

|

| 80 |

+

|

| 81 |

+

# pipenv

|

| 82 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 83 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 84 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 85 |

+

# install all needed dependencies.

|

| 86 |

+

#Pipfile.lock

|

| 87 |

+

|

| 88 |

+

# UV

|

| 89 |

+

# Similar to Pipfile.lock, it is generally recommended to include uv.lock in version control.

|

| 90 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 91 |

+

# commonly ignored for libraries.

|

| 92 |

+

#uv.lock

|

| 93 |

+

|

| 94 |

+

# poetry

|

| 95 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 96 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 97 |

+

# commonly ignored for libraries.

|

| 98 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 99 |

+

#poetry.lock

|

| 100 |

+

|

| 101 |

+

# pdm

|

| 102 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 103 |

+

#pdm.lock

|

| 104 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 105 |

+

# in version control.

|

| 106 |

+

# https://pdm.fming.dev/latest/usage/project/#working-with-version-control

|

| 107 |

+

.pdm.toml

|

| 108 |

+

.pdm-python

|

| 109 |

+

.pdm-build/

|

| 110 |

+

|

| 111 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 112 |

+

__pypackages__/

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

# SageMath parsed files

|

| 116 |

+

*.sage.py

|

| 117 |

+

|

| 118 |

+

# Environments

|

| 119 |

+

.env

|

| 120 |

+

.venv

|

| 121 |

+

env/

|

| 122 |

+

venv/

|

| 123 |

+

ENV/

|

| 124 |

+

env.bak/

|

| 125 |

+

venv.bak/

|

| 126 |

+

|

| 127 |

+

# Spyder project settings

|

| 128 |

+

.spyderproject

|

| 129 |

+

.spyproject

|

| 130 |

+

|

| 131 |

+

# Rope project settings

|

| 132 |

+

.ropeproject

|

| 133 |

+

|

| 134 |

+

# mkdocs documentation

|

| 135 |

+

/site

|

| 136 |

+

|

| 137 |

+

# mypy

|

| 138 |

+

.mypy_cache/

|

| 139 |

+

.dmypy.json

|

| 140 |

+

dmypy.json

|

| 141 |

+

|

| 142 |

+

# Pyre type checker

|

| 143 |

+

.pyre/

|

| 144 |

+

|

| 145 |

+

# pytype static type analyzer

|

| 146 |

+

.pytype/

|

| 147 |

+

|

| 148 |

+

# Cython debug symbols

|

| 149 |

+

cython_debug/

|

| 150 |

+

|

| 151 |

+

.idea/

|

| 152 |

+

|

| 153 |

+

# Ruff stuff:

|

| 154 |

+

.ruff_cache/

|

| 155 |

+

|

| 156 |

+

# PyPI configuration file

|

| 157 |

+

.pypirc

|

| 158 |

+

|

| 159 |

+

# Datasets, logs, plots, etc.

|

| 160 |

+

outputs/

|

| 161 |

+

models/*

|

| 162 |

+

|

| 163 |

+

*.arrow

|

| 164 |

+

*.png

|

| 165 |

+

*.pt

|

| 166 |

+

*.pdf

|

| 167 |

+

*.gif

|

README.md

CHANGED

|

@@ -1,2 +1,128 @@

|

|

| 1 |

-

# TempoPFN

|

| 2 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# TempoPFN: Synthetic Pre-Training of Linear RNNs for Zero-Shot Time Series Forecasting

|

| 2 |

+

|

| 3 |

+

[](https://arxiv.org/abs/2510.25502)

|

| 4 |

+

[](https://github.com/automl/TempoPFN/blob/main/LICENSE)

|

| 5 |

+

|

| 6 |

+

---

|

| 7 |

+

|

| 8 |

+

**TempoPFN** introduced in [TempoPFN: Synthetic Pre-Training of Linear RNNs for Zero-Shot Time Series Forecasting](https://arxiv.org/abs/2510.25502), is a univariate time series foundation model pretrained **entirely on synthetic data**. It delivers top-tier zero-shot forecasting accuracy while remaining fully reproducible and free from real-data leakage.

|

| 9 |

+

|

| 10 |

+

Built on a **Linear RNN (GatedDeltaProduct)** backbone, TempoPFN performs end-to-end forecasting without patching or windowing. Its design enables fully parallelizable training and inference while maintaining stable temporal state-tracking across long sequences.

|

| 11 |

+

|

| 12 |

+

This repository includes the [**pretrained 35M parameter model,**](https://www.dropbox.com/scl/fi/5vmjr7nx9wj9w1vl2giuv/checkpoint.pth?rlkey=qmk08ojp7wj0l6kpm8hzgbzju&st=dyr07d00&dl=0), all training and inference code, and the **complete synthetic data generation pipeline** used for pretraining.

|

| 13 |

+

|

| 14 |

+

## ✨ Why TempoPFN?

|

| 15 |

+

|

| 16 |

+

* **High Performance, No Real Data:** Achieves top-tier competitive results on **GIFT-Eval, outperforming all existing synthetic-only approaches** and **surpassing the vast majority of models trained on real-world data**. This ensures full reproducibility and eliminates benchmark leakage.

|

| 17 |

+

* **Parallel and Efficient:** The linear recurrence design enables full-sequence parallelization. This gives us the best of both worlds: the linear efficiency of an RNN, but with the training parallelism of a Transformer.

|

| 18 |

+

* **Open and Reproducible:** Includes the full synthetic data pipeline, configurations, and scripts to reproduce training from scratch.

|

| 19 |

+

* **State-Tracking Stability:** The GatedDeltaProduct recurrence and *state-weaving* mechanism preserve temporal continuity and information flow across long horizons, improving robustness without non-linear recurrence.

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

## ⚙️ Installation

|

| 25 |

+

|

| 26 |

+

```bash

|

| 27 |

+

git clone https://github.com/automl/TempoPFN.git

|

| 28 |

+

cd TempoPFN

|

| 29 |

+

python -m venv venv && source venv/bin/activate

|

| 30 |

+

|

| 31 |

+

# 1. Install PyTorch first (see PyTorch website for your specific CUDA version)

|

| 32 |

+

# Example for CUDA 12.6:

|

| 33 |

+

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu126

|

| 34 |

+

|

| 35 |

+

# 2. Install TempoPFN and all other dependencies

|

| 36 |

+

pip install .

|

| 37 |

+

export PYTHONPATH=$PWD

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

## 🚀 Quick Start: Run the Demo

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

**Prerequisites:**

|

| 45 |

+

* You must have a **CUDA-capable GPU** with a matching PyTorch version installed.

|

| 46 |

+

* You have run `export PYTHONPATH=$PWD` from the repo's root directory (see Installation).

|

| 47 |

+

|

| 48 |

+

### 1. Run the Quick Start Script

|

| 49 |

+

|

| 50 |

+

Run a demo forecast on a synthetic sine wave:

|

| 51 |

+

```bash

|

| 52 |

+

python examples/quick_start_tempo_pfn.py

|

| 53 |

+

```

|

| 54 |

+

|

| 55 |

+

### 2. Run with a Local Checkpoint

|

| 56 |

+

|

| 57 |

+

If you have already downloaded the model (e.g., to `models/checkpoint.pth`), you can point the script to it:

|

| 58 |

+

```bash

|

| 59 |

+

python examples/quick_start_tempo_pfn.py --checkpoint models/checkpoint.pth

|

| 60 |

+

```

|

| 61 |

+

|

| 62 |

+

### 3. Run the Notebook version

|

| 63 |

+

|

| 64 |

+

```bash

|

| 65 |

+

jupyter notebook examples/quick_start_tempo_pfn.ipynb

|

| 66 |

+

```

|

| 67 |

+

|

| 68 |

+

### Hardware & Performance Tips

|

| 69 |

+

|

| 70 |

+

**GPU Required:** Inference requires a CUDA-capable GPU. Tested on NVIDIA A100/H100.

|

| 71 |

+

|

| 72 |

+

**Triton Caches:** To prevent slowdowns from writing caches to a network filesystem, route caches to a local directory (like `/tmp`) before running:

|

| 73 |

+

```bash

|

| 74 |

+

LOCAL_CACHE_BASE="${TMPDIR:-/tmp}/tsf-$(date +%s)"

|

| 75 |

+

mkdir -p "${LOCAL_CACHE_BASE}/triton" "${LOCAL_CACHE_BASE}/torchinductor"

|

| 76 |

+

export TRITON_CACHE_DIR="${LOCAL_CACHE_BASE}/triton"

|

| 77 |

+

export TORCHINDUCTOR_CACHE_DIR="${LOCAL_CACHE_BASE}/torchinductor"

|

| 78 |

+

|

| 79 |

+

python examples/quick_start_tempo_pfn.py

|

| 80 |

+

```

|

| 81 |

+

|

| 82 |

+

## 🚂 Training

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

### Single-GPU Training (for debugging)

|

| 86 |

+

```bash

|

| 87 |

+

torchrun --standalone --nproc_per_node=1 src/training/trainer_dist.py --config ./configs/train.yaml

|

| 88 |

+

```

|

| 89 |

+

|

| 90 |

+

### Multi-GPU Training (Single-Node)

|

| 91 |

+

|

| 92 |

+

This example uses 8 GPUs. The training script uses PyTorch DistributedDataParallel (DDP).

|

| 93 |

+

```bash

|

| 94 |

+

torchrun --standalone --nproc_per_node=8 src/training/trainer_dist.py --config ./configs/train.yaml

|

| 95 |

+

```

|

| 96 |

+

|

| 97 |

+

### Configuration

|

| 98 |

+

|

| 99 |

+

All training and model parameters are controlled via YAML files in `configs/` (architecture, optimizers, paths).

|

| 100 |

+

|

| 101 |

+

## 💾 Synthetic Data Generation

|

| 102 |

+

|

| 103 |

+

A core contribution of this work is our open-source synthetic data pipeline, located in `src/synthetic_generation/`. It combines diverse generators with a powerful augmentation cascade.

|

| 104 |

+

|

| 105 |

+

**Generators Used:**

|

| 106 |

+

|

| 107 |

+

* **Adapted Priors:** ForecastPFN, KernelSynth, GaussianProcess (GP), and CauKer (Structural Causal Models).

|

| 108 |

+

* **Novel Priors:** SDE (a flexible regime-switching Ornstein-Uhlenbeck process), Sawtooth, StepFunction, Anomaly, Spikes, SineWave, and Audio-Inspired generators (Stochastic Rhythms, Financial Volatility, Network Topology, Multi-Scale Fractals).

|

| 109 |

+

|

| 110 |

+

You can easily generate your own data by instantiating a generator wrapper. See `examples/generate_synthetic_data.py` for a minimal script, or inspect the generator code in `src/synthetic_generation/`.

|

| 111 |

+

|

| 112 |

+

## 🤝 License

|

| 113 |

+

|

| 114 |

+

This project is licensed under the Apache 2.0 License. See the LICENSE file for details. This permissive license allows for both academic and commercial use.

|

| 115 |

+

|

| 116 |

+

## 📚 Citation

|

| 117 |

+

|

| 118 |

+

If you find TempoPFN useful in your research, please consider citing our paper:

|

| 119 |

+

```bibtex

|

| 120 |

+

@misc{moroshan2025tempopfn,

|

| 121 |

+

title={TempoPFN: Synthetic Pre-Training of Linear RNNs for Zero-Shot Time Series Forecasting},

|

| 122 |

+

author={Vladyslav Moroshan and Julien Siems and Arber Zela and Timur Carstensen and Frank Hutter},

|

| 123 |

+

year={2025},

|

| 124 |

+

eprint={2510.25502},

|

| 125 |

+

archivePrefix={arXiv},

|

| 126 |

+

primaryClass={cs.LG}

|

| 127 |

+

}

|

| 128 |

+

```

|

configs/example.yaml

ADDED

|

@@ -0,0 +1,118 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

train_data_path: null # Replace with the path to root of the training data directory with subdirectories for each generator (e.g. gp, kernel, etc.)

|

| 2 |

+

model_path: ./models # Path where the model will be saved

|

| 3 |

+

model_name: TempoPFN

|

| 4 |

+

continue_training: false

|

| 5 |

+

checkpoint_path: null # Replace with the path to the checkpoint file

|

| 6 |

+

seed: 2025

|

| 7 |

+

wandb: true # whether to log to wandb

|

| 8 |

+

wandb_project_name: TempoPFNTraining

|

| 9 |

+

wandb_entity: university-of-freiburg-2024

|

| 10 |

+

wandb_plots: false

|

| 11 |

+

|

| 12 |

+

batch_size: 40

|

| 13 |

+

num_training_iterations: 1000000 # 1M

|

| 14 |

+

validation_batch_size: 64

|

| 15 |

+

num_validation_batches: 1

|

| 16 |

+

num_workers: 4

|

| 17 |

+

gradient_accumulation_enabled: true

|

| 18 |

+

accumulation_steps: 5 # Number of batches to accumulate before updating (effective batch size = batch_size * accumulation_steps)

|

| 19 |

+

log_interval: 2048

|

| 20 |

+

save_every: 100000

|

| 21 |

+

|

| 22 |

+

generator_proportions:

|

| 23 |

+

forecast_pfn: 1.0

|

| 24 |

+

gp: 1.0

|

| 25 |

+

kernel: 1.0

|

| 26 |

+

sawtooth: 1.0

|

| 27 |

+

sinewave: 1.0

|

| 28 |

+

step: 1.0

|

| 29 |

+

anomaly: 1.0

|

| 30 |

+

spike: 1.0

|

| 31 |

+

cauker_univariate: 1.0

|

| 32 |

+

ou_process: 3.0

|

| 33 |

+

audio_financial_volatility: 0.1

|

| 34 |

+

audio_multi_scale_fractal: 0.1

|

| 35 |

+

audio_network_topology: 0.5

|

| 36 |

+

audio_stochastic_rhythm: 0.5

|

| 37 |

+

augmented_per_sample_2048: 2.0

|

| 38 |

+

augmented_temp_batch_2048: 2.0

|

| 39 |

+

|

| 40 |

+

# Learning Rate Scheduler Configuration

|

| 41 |

+

lr_scheduler: cosine # Options: "warmup_stable_decay", "cosine_with_warmup", "cosine_with_restarts", "cosine"

|

| 42 |

+

|

| 43 |

+

# Learning Rate Parameters

|

| 44 |

+

peak_lr: 0.0002 # 2e-4 - Peak learning rate

|

| 45 |

+

min_lr_ratio: 0.01 # Minimum LR as fraction of peak LR

|

| 46 |

+

|

| 47 |

+

# WSD Scheduler Specific Parameters

|

| 48 |

+

warmup_ratio: 0.003 # 0.3% of total steps for warmup

|

| 49 |

+

stable_ratio: 0.90 # 90% of total steps at stable learning rate

|

| 50 |

+

decay_type: cosine # Type of decay: "cosine" or "linear"

|

| 51 |

+

|

| 52 |

+

# Alternative Scheduler Parameters (if using different schedulers)

|

| 53 |

+

num_cycles: 0.5 # For cosine_with_warmup: 0.5 = half cosine wave

|

| 54 |

+

num_restart_cycles: 4 # For cosine_with_restarts: number of restart cycles

|

| 55 |

+

|

| 56 |

+

# Optimizer Configuration

|

| 57 |

+

weight_decay: 0.01 # Weight decay for AdamW

|

| 58 |

+

beta1: 0.9 # Adam beta1 parameter

|

| 59 |

+

beta2: 0.98 # Adam beta2 parameter (optimized for transformers)

|

| 60 |

+

optimizer_eps: 1e-6 # Adam epsilon

|

| 61 |

+

|

| 62 |

+

# Training Stability

|

| 63 |

+

gradient_clip_val: 100.0

|

| 64 |

+

scaler: custom_robust

|

| 65 |

+

|

| 66 |

+

gift_eval:

|

| 67 |

+

evaluate_on_gift_eval: false

|

| 68 |

+

max_context_length: 3072

|

| 69 |

+

create_plots: false

|

| 70 |

+

max_plots: 5

|

| 71 |

+

dataset_storage_path: null # Replace with the path to the dataset storage path

|

| 72 |

+

|

| 73 |

+

data_augmentation:

|

| 74 |

+

nan_augmentation: true

|

| 75 |

+

scaler_augmentation: false

|

| 76 |

+

length_shortening: true

|

| 77 |

+

nan_stats_path: ./data/nan_stats.json

|

| 78 |

+

|

| 79 |

+

augmentation_probabilities:

|

| 80 |

+

scaler_augmentation: 0.5

|

| 81 |

+

|

| 82 |

+

TimeSeriesModel:

|

| 83 |

+

# Core architecture

|

| 84 |

+

embed_size: 512

|

| 85 |

+

num_encoder_layers: 10

|

| 86 |

+

|

| 87 |

+

# Scaling and preprocessing

|

| 88 |

+

scaler: custom_robust

|

| 89 |

+

epsilon: 0.00001

|

| 90 |

+

scaler_clamp_value: null

|

| 91 |

+

handle_constants: false

|

| 92 |

+

|

| 93 |

+

# Time features

|

| 94 |

+

K_max: 25

|

| 95 |

+

time_feature_config:

|

| 96 |

+

use_enhanced_features: true

|

| 97 |

+

use_holiday_features: false

|

| 98 |

+

use_index_features: true

|

| 99 |

+

include_seasonality_info: true

|

| 100 |

+

|

| 101 |

+

drop_enc_allow: false

|

| 102 |

+

encoding_dropout: 0.0

|

| 103 |

+

|

| 104 |

+

# Encoder configuration

|

| 105 |

+

encoder_config:

|

| 106 |

+

attn_mode: chunk

|

| 107 |

+

num_heads: 4

|

| 108 |

+

expand_v: 1.0

|

| 109 |

+

use_gate: false

|

| 110 |

+

use_short_conv: true

|

| 111 |

+

conv_size: 16

|

| 112 |

+

allow_neg_eigval: true

|

| 113 |

+

use_forget_gate: true

|

| 114 |

+

num_householder: 4

|

| 115 |

+

weaving: true

|

| 116 |

+

|

| 117 |

+

loss_type: 'quantile'

|

| 118 |

+

quantiles: [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

|

data/nan_stats.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

examples/generate_synthetic_data.py

ADDED

|

@@ -0,0 +1,324 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import logging

|

| 2 |

+

import os

|

| 3 |

+

from typing import Optional

|

| 4 |

+

|

| 5 |

+

import torch

|

| 6 |

+

|

| 7 |

+

from src.data.containers import BatchTimeSeriesContainer

|

| 8 |

+

from src.data.utils import sample_future_length

|

| 9 |

+

from src.plotting.plot_multivariate_timeseries import plot_from_container

|

| 10 |

+

from src.synthetic_generation.anomalies.anomaly_generator_wrapper import (

|

| 11 |

+

AnomalyGeneratorWrapper,

|

| 12 |

+

)

|

| 13 |

+

from src.synthetic_generation.audio_generators.financial_volatility_wrapper import (

|

| 14 |

+

FinancialVolatilityAudioWrapper,

|

| 15 |

+

)

|

| 16 |

+

from src.synthetic_generation.audio_generators.multi_scale_fractal_wrapper import (

|

| 17 |

+

MultiScaleFractalAudioWrapper,

|

| 18 |

+

)

|

| 19 |

+

from src.synthetic_generation.audio_generators.network_topology_wrapper import (

|

| 20 |

+

NetworkTopologyAudioWrapper,

|

| 21 |

+

)

|

| 22 |

+

from src.synthetic_generation.audio_generators.stochastic_rhythm_wrapper import (

|

| 23 |

+

StochasticRhythmAudioWrapper,

|

| 24 |

+

)

|

| 25 |

+

from src.synthetic_generation.cauker.cauker_generator_wrapper import (

|

| 26 |

+

CauKerGeneratorWrapper,

|

| 27 |

+

)

|

| 28 |

+

from src.synthetic_generation.forecast_pfn_prior.forecast_pfn_generator_wrapper import (

|

| 29 |

+

ForecastPFNGeneratorWrapper,

|

| 30 |

+

)

|

| 31 |

+

from src.synthetic_generation.generator_params import (

|

| 32 |

+

AnomalyGeneratorParams,

|

| 33 |

+

CauKerGeneratorParams,

|

| 34 |

+

FinancialVolatilityAudioParams,

|

| 35 |

+

ForecastPFNGeneratorParams,

|

| 36 |

+

GPGeneratorParams,

|

| 37 |

+

KernelGeneratorParams,

|

| 38 |

+

MultiScaleFractalAudioParams,

|

| 39 |

+

NetworkTopologyAudioParams,

|

| 40 |

+

OrnsteinUhlenbeckProcessGeneratorParams,

|

| 41 |

+

SawToothGeneratorParams,

|

| 42 |

+

SineWaveGeneratorParams,

|

| 43 |

+

SpikesGeneratorParams,

|

| 44 |

+

StepGeneratorParams,

|

| 45 |

+

StochasticRhythmAudioParams,

|

| 46 |

+

)

|

| 47 |

+

from src.synthetic_generation.gp_prior.gp_generator_wrapper import (

|

| 48 |

+

GPGeneratorWrapper,

|

| 49 |

+

)

|

| 50 |

+

from src.synthetic_generation.kernel_synth.kernel_generator_wrapper import (

|

| 51 |

+

KernelGeneratorWrapper,

|

| 52 |

+

)

|

| 53 |

+

from src.synthetic_generation.ornstein_uhlenbeck_process.ou_generator_wrapper import (

|

| 54 |

+

OrnsteinUhlenbeckProcessGeneratorWrapper,

|

| 55 |

+

)

|

| 56 |

+

from src.synthetic_generation.sawtooth.sawtooth_generator_wrapper import (

|

| 57 |

+

SawToothGeneratorWrapper,

|

| 58 |

+

)

|

| 59 |

+

from src.synthetic_generation.sine_waves.sine_wave_generator_wrapper import (

|

| 60 |

+

SineWaveGeneratorWrapper,

|

| 61 |

+

)

|

| 62 |

+

from src.synthetic_generation.spikes.spikes_generator_wrapper import (

|

| 63 |

+

SpikesGeneratorWrapper,

|

| 64 |

+

)

|

| 65 |

+

from src.synthetic_generation.steps.step_generator_wrapper import (

|

| 66 |

+

StepGeneratorWrapper,

|

| 67 |

+

)

|

| 68 |

+

|

| 69 |

+

# Configure logging

|

| 70 |

+

logging.basicConfig(

|

| 71 |

+

level=logging.INFO, format="%(asctime)s - %(levelname)s - %(message)s"

|

| 72 |

+

)

|

| 73 |

+

logger = logging.getLogger(__name__)

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

def visualize_batch_sample(

|

| 77 |

+

generator,

|

| 78 |

+

batch_size: int = 8,

|

| 79 |

+

output_dir: str = "outputs/plots",

|

| 80 |

+

sample_idx: Optional[int] = None,

|

| 81 |

+

prefix: str = "",

|

| 82 |

+

seed: Optional[int] = None,

|

| 83 |

+

) -> None:

|

| 84 |

+

"""

|

| 85 |

+

Visualize a sample from a batch of synthetic multivariate time series from any generator.

|

| 86 |

+

Also plot artificial predictions for demonstration if requested.

|

| 87 |

+

|

| 88 |

+

Args:

|

| 89 |

+

generator: Any generator wrapper (LMC, Kernel, GP, etc.)

|

| 90 |

+

batch_size: Number of samples to generate in the batch

|

| 91 |

+

output_dir: Directory to save plots

|

| 92 |

+

sample_idx: Index of the sample to visualize

|

| 93 |

+

seed: Seed for the generator

|

| 94 |

+

"""

|

| 95 |

+

os.makedirs(output_dir, exist_ok=True)

|

| 96 |

+

|

| 97 |

+

generator_name = generator.__class__.__name__

|

| 98 |

+

logger.info(f"[{generator_name}] Generating batch of size {batch_size}")

|

| 99 |

+

|

| 100 |

+

batch = generator.generate_batch(batch_size=batch_size, seed=seed)

|

| 101 |

+

values = torch.from_numpy(batch.values)

|

| 102 |

+

if values.ndim == 2:

|

| 103 |

+

values = values.unsqueeze(-1) # Add channel dimension: [batch_size, seq_len, 1]

|

| 104 |

+

|

| 105 |

+

future_length = sample_future_length(range="gift_eval")

|

| 106 |

+

# Slice along the time dimension (dimension 1)

|

| 107 |

+

history_values = values[:, :-future_length, :]

|

| 108 |

+

future_values = values[:, -future_length:, :]

|

| 109 |

+

|

| 110 |

+

batch = BatchTimeSeriesContainer(

|

| 111 |

+

history_values=history_values,

|

| 112 |

+

future_values=future_values,

|

| 113 |

+

start=batch.start,

|

| 114 |

+

frequency=batch.frequency,

|

| 115 |

+

)

|

| 116 |

+

|

| 117 |

+

logger.info(

|

| 118 |

+

f"[{generator_name}] Batch history values shape: {batch.history_values.shape}"

|

| 119 |

+

)

|

| 120 |

+

logger.info(

|

| 121 |

+

f"[{generator_name}] Batch future values shape: {batch.future_values.shape}"

|

| 122 |

+

)

|

| 123 |

+

logger.info(f"[{generator_name}] Batch start: {batch.start}")

|

| 124 |

+

logger.info(f"[{generator_name}] Batch frequency: {batch.frequency}")

|

| 125 |

+

|

| 126 |

+

if sample_idx is None:

|

| 127 |

+

for sample_idx in range(batch_size):

|

| 128 |

+

filename = f"{prefix}_{generator_name.lower().replace('generatorwrapper', '')}_sample_{sample_idx}.png"

|

| 129 |

+

output_file = os.path.join(output_dir, filename)

|

| 130 |

+

title = f"{prefix.capitalize()} {generator_name.replace('GeneratorWrapper', '')} Synthetic Time Series (Sample {sample_idx})"

|

| 131 |

+

|

| 132 |

+

plot_from_container(

|

| 133 |

+

batch=batch,

|

| 134 |

+

sample_idx=sample_idx,

|

| 135 |

+

output_file=output_file,

|

| 136 |

+

show=False,

|

| 137 |

+

title=title,

|

| 138 |

+

)

|

| 139 |

+

logger.info(

|

| 140 |

+

f"[{generator_name}] Saved plot for sample {sample_idx} to {output_file}"

|

| 141 |

+

)

|

| 142 |

+

logger.info("--------------------------------")

|

| 143 |

+

|

| 144 |

+

|

| 145 |

+

if __name__ == "__main__":

|

| 146 |

+

# Configuration

|

| 147 |

+

batch_size = 2

|

| 148 |

+

total_length = 2048

|

| 149 |

+

output_dir = "outputs/plots"

|

| 150 |

+

global_seed = 2025

|

| 151 |

+

|

| 152 |

+

logger.info(f"Saving plots to {output_dir}")

|

| 153 |

+

|

| 154 |

+

kernel_params_univariate = KernelGeneratorParams(

|

| 155 |

+

global_seed=global_seed,

|

| 156 |

+

length=total_length,

|

| 157 |

+

)

|

| 158 |

+

kernel_gen_univariate = KernelGeneratorWrapper(kernel_params_univariate)

|

| 159 |

+

|

| 160 |

+

gp_params_univariate = GPGeneratorParams(

|

| 161 |

+

global_seed=global_seed,

|

| 162 |

+

length=total_length,

|

| 163 |

+

)

|

| 164 |

+

gp_gen_univariate = GPGeneratorWrapper(gp_params_univariate)

|

| 165 |

+

|

| 166 |

+

forecast_pfn_univariate_params = ForecastPFNGeneratorParams(

|

| 167 |

+

global_seed=global_seed,

|

| 168 |

+

length=total_length,

|

| 169 |

+

)

|

| 170 |

+

forecast_pfn_univariate_gen = ForecastPFNGeneratorWrapper(

|

| 171 |

+

forecast_pfn_univariate_params

|

| 172 |

+

)

|

| 173 |

+

|

| 174 |

+

sine_wave_params = SineWaveGeneratorParams(

|

| 175 |

+

global_seed=global_seed,

|

| 176 |

+

length=total_length,

|

| 177 |

+

)

|

| 178 |

+

sine_wave_univariate_gen = SineWaveGeneratorWrapper(sine_wave_params)

|

| 179 |

+

|

| 180 |

+

sawtooth_params = SawToothGeneratorParams(

|

| 181 |

+

global_seed=global_seed,

|

| 182 |

+

length=total_length,

|

| 183 |

+

)

|

| 184 |

+

sawtooth_univariate_gen = SawToothGeneratorWrapper(sawtooth_params)

|

| 185 |

+

|

| 186 |

+

step_params = params = StepGeneratorParams(

|

| 187 |

+

length=2048,

|

| 188 |

+

global_seed=42,

|

| 189 |

+

)

|

| 190 |

+

step_gen_univariate = StepGeneratorWrapper(step_params)

|

| 191 |

+

|

| 192 |

+

anomaly_params = AnomalyGeneratorParams(

|

| 193 |

+

global_seed=global_seed,

|

| 194 |

+

length=total_length,

|

| 195 |

+

)

|

| 196 |

+

anomaly_gen_univariate = AnomalyGeneratorWrapper(anomaly_params)

|

| 197 |

+

|

| 198 |

+

spikes_params = SpikesGeneratorParams(

|

| 199 |

+

global_seed=global_seed,

|

| 200 |

+

length=total_length,

|

| 201 |

+

)

|

| 202 |

+

spikes_gen_univariate = SpikesGeneratorWrapper(spikes_params)

|

| 203 |

+

|

| 204 |

+

cauker_params_multivariate = CauKerGeneratorParams(

|

| 205 |

+

global_seed=global_seed,

|

| 206 |

+

length=total_length,

|

| 207 |

+

num_channels=5,

|

| 208 |

+

)

|

| 209 |

+

cauker_gen_multivariate = CauKerGeneratorWrapper(cauker_params_multivariate)

|

| 210 |

+

|

| 211 |

+

ou_params = OrnsteinUhlenbeckProcessGeneratorParams(

|

| 212 |

+

global_seed=global_seed,

|

| 213 |

+

length=total_length,

|

| 214 |

+

)

|

| 215 |

+

ou_gen_univariate = OrnsteinUhlenbeckProcessGeneratorWrapper(ou_params)

|

| 216 |

+

|

| 217 |

+

stochastic_rhythm_params = StochasticRhythmAudioParams(

|

| 218 |

+

global_seed=global_seed,

|

| 219 |

+

length=total_length,

|

| 220 |

+

)

|

| 221 |

+

stochastic_rhythm_gen_univariate = StochasticRhythmAudioWrapper(

|

| 222 |

+

stochastic_rhythm_params

|

| 223 |

+

)

|

| 224 |

+

|

| 225 |

+

financial_volatility_params = FinancialVolatilityAudioParams(

|

| 226 |

+

global_seed=global_seed,

|

| 227 |

+

length=total_length,

|

| 228 |

+

)

|

| 229 |

+

financial_volatility_gen_univariate = FinancialVolatilityAudioWrapper(

|

| 230 |

+

financial_volatility_params

|

| 231 |

+

)

|

| 232 |

+

|

| 233 |

+

multi_scale_fractal_params = MultiScaleFractalAudioParams(

|

| 234 |

+

global_seed=global_seed,

|

| 235 |

+

length=total_length,

|

| 236 |

+

)

|

| 237 |

+

multi_scale_fractal_gen_univariate = MultiScaleFractalAudioWrapper(

|

| 238 |

+

multi_scale_fractal_params

|

| 239 |

+

)

|

| 240 |

+

|

| 241 |

+

network_topology_params = NetworkTopologyAudioParams(

|

| 242 |

+

global_seed=global_seed,

|

| 243 |

+

length=total_length,

|

| 244 |

+

)

|

| 245 |

+

network_topology_gen_univariate = NetworkTopologyAudioWrapper(

|

| 246 |

+

network_topology_params

|

| 247 |

+

)

|

| 248 |

+

|

| 249 |

+

# Visualize samples from all generators

|

| 250 |

+

visualize_batch_sample(

|

| 251 |

+

kernel_gen_univariate, batch_size=batch_size, output_dir=output_dir

|

| 252 |

+

)

|

| 253 |

+

|

| 254 |

+

visualize_batch_sample(

|

| 255 |

+

gp_gen_univariate, batch_size=batch_size, output_dir=output_dir

|

| 256 |

+

)

|

| 257 |

+

|

| 258 |

+

visualize_batch_sample(

|

| 259 |

+

forecast_pfn_univariate_gen, batch_size=batch_size, output_dir=output_dir

|

| 260 |

+

)

|

| 261 |

+

|

| 262 |

+

visualize_batch_sample(

|

| 263 |

+

sine_wave_univariate_gen,

|

| 264 |

+

batch_size=batch_size,

|

| 265 |

+

output_dir=output_dir,

|

| 266 |

+

)

|

| 267 |

+

|

| 268 |

+

visualize_batch_sample(

|

| 269 |

+

sawtooth_univariate_gen,

|

| 270 |

+

batch_size=batch_size,

|

| 271 |

+

output_dir=output_dir,

|

| 272 |

+

)

|

| 273 |

+

|

| 274 |

+

visualize_batch_sample(

|

| 275 |

+

step_gen_univariate,

|

| 276 |

+

batch_size=batch_size,

|

| 277 |

+

output_dir=output_dir,

|

| 278 |

+

)

|

| 279 |

+

|

| 280 |

+

visualize_batch_sample(

|

| 281 |

+

anomaly_gen_univariate,

|

| 282 |

+

batch_size=batch_size,

|

| 283 |

+

output_dir=output_dir,

|

| 284 |

+

)

|

| 285 |

+

|

| 286 |

+

visualize_batch_sample(

|

| 287 |

+

spikes_gen_univariate,

|

| 288 |

+

batch_size=batch_size,

|

| 289 |

+

output_dir=output_dir,

|

| 290 |

+

)

|

| 291 |

+

|

| 292 |

+

visualize_batch_sample(

|

| 293 |

+

cauker_gen_multivariate,

|

| 294 |

+

batch_size=batch_size,

|

| 295 |

+

output_dir=output_dir,

|

| 296 |

+

prefix="multivariate",

|

| 297 |

+

)

|

| 298 |

+

|

| 299 |

+

visualize_batch_sample(

|

| 300 |

+

ou_gen_univariate,

|

| 301 |

+

batch_size=batch_size,

|

| 302 |

+

output_dir=output_dir,

|

| 303 |

+

seed=global_seed,

|

| 304 |

+

)

|

| 305 |

+

|

| 306 |

+

visualize_batch_sample(

|

| 307 |

+

stochastic_rhythm_gen_univariate, batch_size=batch_size, output_dir=output_dir

|

| 308 |

+

)

|

| 309 |

+

|

| 310 |

+

visualize_batch_sample(

|

| 311 |

+

financial_volatility_gen_univariate,

|

| 312 |

+

batch_size=batch_size,

|

| 313 |

+

output_dir=output_dir,

|

| 314 |

+

)

|

| 315 |

+

|

| 316 |

+

visualize_batch_sample(

|

| 317 |

+

multi_scale_fractal_gen_univariate,

|

| 318 |

+

batch_size=batch_size,

|

| 319 |

+

output_dir=output_dir,

|

| 320 |

+

)

|

| 321 |

+

|

| 322 |

+

visualize_batch_sample(

|

| 323 |

+

network_topology_gen_univariate, batch_size=batch_size, output_dir=output_dir

|

| 324 |

+

)

|

examples/quick_start_tempo_pfn.ipynb

ADDED

|

@@ -0,0 +1,286 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "231c6227",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"# Quick Start: Univariate Quantile Forecasting (CUDA, bfloat16)\n",

|

| 9 |

+

"\n",

|

| 10 |

+

"This notebook demonstrates how to:\n",

|

| 11 |

+

"- Generate synthetic sine wave time series data\n",

|

| 12 |

+

"- Pack data into `BatchTimeSeriesContainer`\n",

|

| 13 |

+

"- Load a pretrained model (from Dropbox)\n",

|

| 14 |

+

"- Run inference with bfloat16 on CUDA\n",

|

| 15 |

+

"- Visualize predictions\n"

|

| 16 |

+

]

|

| 17 |

+

},

|

| 18 |

+

{

|

| 19 |

+

"cell_type": "markdown",

|

| 20 |

+

"id": "bb6c5424-1c63-4cb0-a818-45d4199914e5",

|

| 21 |

+

"metadata": {},

|

| 22 |

+

"source": [

|

| 23 |

+

"## 1) Setup"

|

| 24 |

+

]

|

| 25 |

+

},

|

| 26 |

+

{

|

| 27 |

+

"cell_type": "code",

|

| 28 |

+

"execution_count": null,

|