Vladyslav Moroshan

commited on

Commit

·

c4b87d2

1

Parent(s):

5af912c

Initial upload of TempoPFN model, code, and weights

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .vscode/settings.json +2 -0

- LICENSE +201 -0

- README.md +151 -3

- configs/example.yaml +119 -0

- data/dataset_properties.json +152 -0

- data/nan_stats.json +0 -0

- examples/generate_synthetic_data.py +204 -0

- examples/gift_eval/gift_eval_runner.py +251 -0

- examples/gift_eval/gift_eval_submission.ipynb +1439 -0

- examples/quick_start_tempo_pfn.ipynb +280 -0

- examples/quick_start_tempo_pfn.py +101 -0

- examples/utils.py +115 -0

- gitignore +167 -0

- models/checkpoint_38M.pth +3 -0

- pyproject.toml +62 -0

- requirements.txt +25 -0

- src/__init__.py +0 -0

- src/data/__init__.py +0 -0

- src/data/augmentations.py +1318 -0

- src/data/batch_composer.py +705 -0

- src/data/constants.py +25 -0

- src/data/containers.py +204 -0

- src/data/datasets.py +267 -0

- src/data/filter.py +73 -0

- src/data/frequency.py +538 -0

- src/data/loaders.py +661 -0

- src/data/scalers.py +360 -0

- src/data/time_features.py +564 -0

- src/data/utils.py +75 -0

- src/gift_eval/__init__.py +15 -0

- src/gift_eval/constants.py +186 -0

- src/gift_eval/core.py +64 -0

- src/gift_eval/data.py +234 -0

- src/gift_eval/evaluate.py +421 -0

- src/gift_eval/predictor.py +318 -0

- src/gift_eval/results.py +243 -0

- src/models/__init__.py +0 -0

- src/models/blocks.py +62 -0

- src/models/gated_deltaproduct/README.md +344 -0

- src/models/gated_deltaproduct/__init__.py +11 -0

- src/models/gated_deltaproduct/configuration_gated_deltaproduct.py +108 -0

- src/models/gated_deltaproduct/gated_deltaproduct.py +351 -0

- src/models/gated_deltaproduct/modeling_gated_deltaproduct.py +105 -0

- src/models/model.py +427 -0

- src/optim/lr_scheduler.py +360 -0

- src/plotting/__init__.py +0 -0

- src/plotting/gift_eval_utils.py +215 -0

- src/plotting/plot_timeseries.py +292 -0

- src/synthetic_generation/__init__.py +0 -0

- src/synthetic_generation/abstract_classes.py +97 -0

.vscode/settings.json

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

}

|

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

README.md

CHANGED

|

@@ -1,3 +1,151 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: apache-2.0

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

library_name: tempo-pfn

|

| 4 |

+

tags:

|

| 5 |

+

- time-series-forecasting

|

| 6 |

+

- zero-shot

|

| 7 |

+

- rnn

|

| 8 |

+

- linear-rnn

|

| 9 |

+

- synthetic-data

|

| 10 |

+

- foundation-model

|

| 11 |

+

- automl

|

| 12 |

+

arxiv: 2510.25502

|

| 13 |

+

---

|

| 14 |

+

|

| 15 |

+

# TempoPFN: Synthetic Pre-Training of Linear RNNs for Zero-Shot Time Series Forecasting

|

| 16 |

+

|

| 17 |

+

[](https://arxiv.org/abs/2510.25502) [](https://github.com/automl/TempoPFN/blob/main/LICENSE)

|

| 18 |

+

|

| 19 |

+

---

|

| 20 |

+

|

| 21 |

+

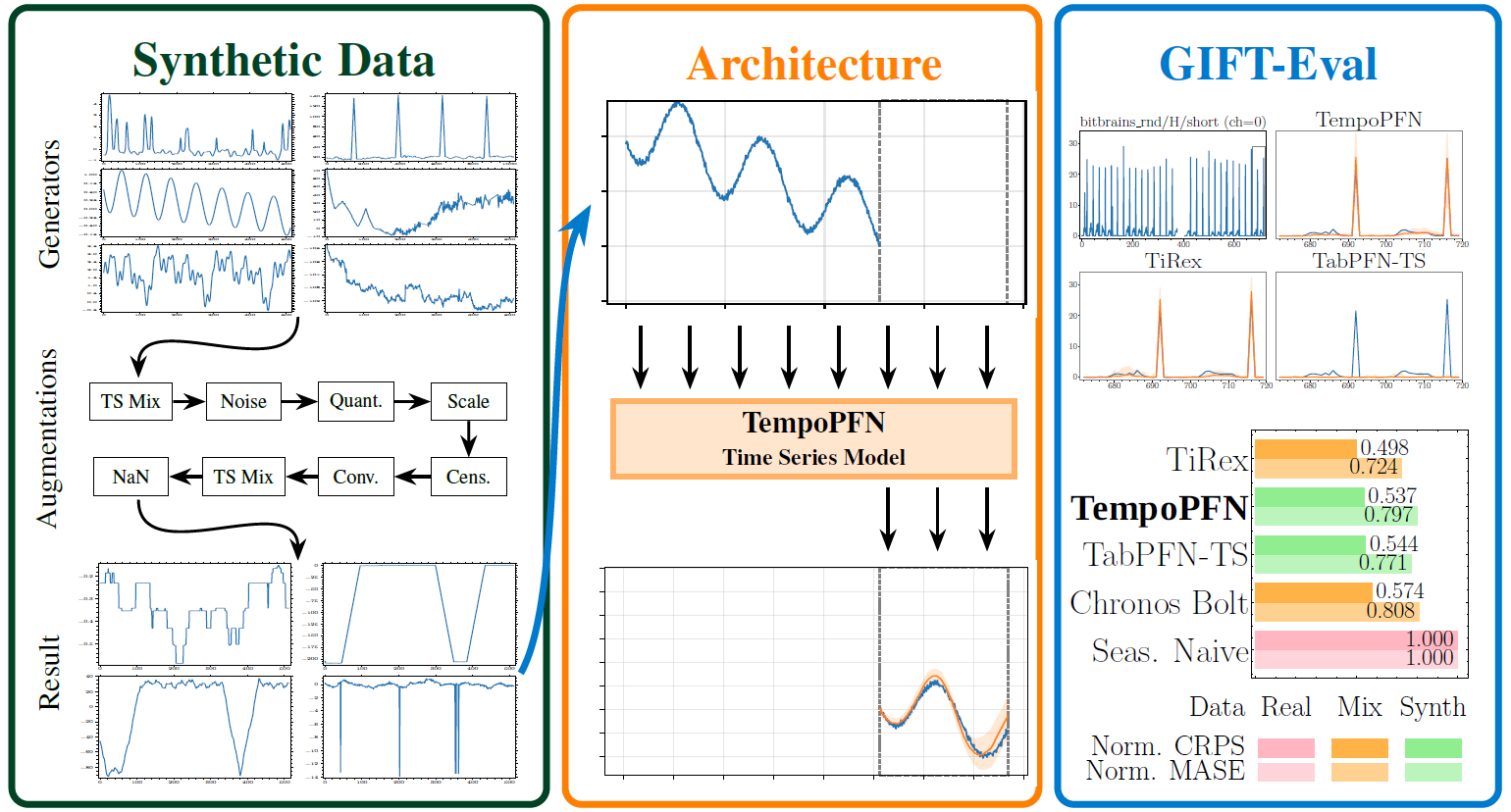

**TempoPFN** introduced in [TempoPFN: Synthetic Pre-Training of Linear RNNs for Zero-Shot Time Series Forecasting](https://arxiv.org/abs/2510.25502), is a univariate time series foundation model pretrained **entirely on synthetic data**. It delivers top-tier zero-shot forecasting accuracy while remaining fully reproducible and free from real-data leakage.

|

| 22 |

+

|

| 23 |

+

Built on a **Linear RNN (GatedDeltaProduct)** backbone, TempoPFN performs end-to-end forecasting without patching or windowing. Its design enables fully parallelizable training and inference while maintaining stable temporal state-tracking across long sequences. The GatedDeltaProduct architecture is based on [DeltaProduct](https://arxiv.org/html/2502.10297v3), extended with state-weaving for time series forecasting. For detailed information about the architecture and custom modifications, see [`src/models/gated_deltaproduct/README.md`](src/models/gated_deltaproduct/README.md).

|

| 24 |

+

|

| 25 |

+

This repository includes the **pretrained 38M parameter model** (`models/checkpoint_38M.pth`), all training and inference code, and the **complete synthetic data generation pipeline** used for pretraining.

|

| 26 |

+

|

| 27 |

+

## ✨ Why TempoPFN?

|

| 28 |

+

|

| 29 |

+

* **High Performance, No Real Data:** Achieves top-tier competitive results on **GIFT-Eval, outperforming all existing synthetic-only approaches** and **surpassing the vast majority of models trained on real-world data**. This ensures full reproducibility and eliminates benchmark leakage.

|

| 30 |

+

* **Parallel and Efficient:** The linear recurrence design enables full-sequence parallelization. This gives us the best of both worlds: the linear efficiency of an RNN, but with the training parallelism of a Transformer.

|

| 31 |

+

* **Open and Reproducible:** Includes the full synthetic data pipeline, configurations, and scripts to reproduce training from scratch.

|

| 32 |

+

* **State-Tracking Stability:** The GatedDeltaProduct recurrence and *state-weaving* mechanism preserve temporal continuity and information flow across long horizons, improving robustness without non-linear recurrence.

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

## ⚙️ Installation

|

| 37 |

+

|

| 38 |

+

> **Note on Model Weights:** This repository uses [Git LFS](https://git-lfs.github.com/) to store the model checkpoint (`.pth` file). You **must** have Git LFS installed to clone the repository correctly.

|

| 39 |

+

>

|

| 40 |

+

> ```bash

|

| 41 |

+

> # Install Git LFS (e.g., on Ubuntu)

|

| 42 |

+

> sudo apt-get install git-lfs

|

| 43 |

+

> git lfs install

|

| 44 |

+

> ```

|

| 45 |

+

|

| 46 |

+

1. **Clone the repository:**

|

| 47 |

+

```bash

|

| 48 |

+

git clone https://huggingface.co/AutoML-org/TempoPFN

|

| 49 |

+

cd TempoPFN

|

| 50 |

+

```

|

| 51 |

+

|

| 52 |

+

2. **Set up the environment:**

|

| 53 |

+

```bash

|

| 54 |

+

python -m venv venv && source venv/bin/activate

|

| 55 |

+

|

| 56 |

+

# 1. Install PyTorch version matching your CUDA version

|

| 57 |

+

# Example for CUDA 12.8:

|

| 58 |

+

pip install torch --index-url https://download.pytorch.org/whl/cu128

|

| 59 |

+

|

| 60 |

+

# 2. Install TempoPFN and all other dependencies

|

| 61 |

+

pip install -r requirements.txt

|

| 62 |

+

export PYTHONPATH=$PWD

|

| 63 |

+

```

|

| 64 |

+

|

| 65 |

+

## 🚀 Quick Start: Run the Demo

|

| 66 |

+

|

| 67 |

+

**Prerequisites:**

|

| 68 |

+

* You must have a **CUDA-capable GPU** with a matching PyTorch version installed.

|

| 69 |

+

* You have run `export PYTHONPATH=$PWD` from the repo's root directory (see Installation).

|

| 70 |

+

|

| 71 |

+

### 1. Run the Quick Start Script

|

| 72 |

+

|

| 73 |

+

Run a demo forecast on a synthetic sine wave. This script will automatically find and load the `models/checkpoint_38M.pth` file included in this repository.

|

| 74 |

+

```bash

|

| 75 |

+

python examples/quick_start_tempo_pfn.py

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

### 2. Run with a Different Checkpoint (Optional)

|

| 79 |

+

|

| 80 |

+

If you have trained your own model, you can point the script to it:

|

| 81 |

+

```bash

|

| 82 |

+

python examples/quick_start_tempo_pfn.py --checkpoint /path/to/your/checkpoint.pth

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

### 3. Run the Notebook version

|

| 86 |

+

```bash

|

| 87 |

+

jupyter notebook examples/quick_start_tempo_pfn.ipynb

|

| 88 |

+

```

|

| 89 |

+

|

| 90 |

+

### Hardware & Performance Tips

|

| 91 |

+

|

| 92 |

+

**GPU Required:** Inference requires a CUDA-capable GPU. Tested on NVIDIA A100/H100.

|

| 93 |

+

|

| 94 |

+

**First Inference May Be Slow:** Initial calls for unseen sequence lengths trigger Triton kernel compilation. Subsequent runs are cached and fast.

|

| 95 |

+

|

| 96 |

+

**Triton Caches:** To prevent slowdowns from writing caches to a network filesystem, route caches to a local directory (like `/tmp`) before running:

|

| 97 |

+

```bash

|

| 98 |

+

LOCAL_CACHE_BASE="${TMPDIR:-/tmp}/tsf-$(date +%s)"

|

| 99 |

+

mkdir -p "${LOCAL_CACHE_BASE}/triton" "${LOCAL_CACHE_BASE}/torchinductor"

|

| 100 |

+

export TRITON_CACHE_DIR="${LOCAL_CACHE_BASE}/triton"

|

| 101 |

+

export TORCHINDUCTOR_CACHE_DIR="${LOCAL_CACHE_BASE}/torchinductor"

|

| 102 |

+

|

| 103 |

+

python examples/quick_start_tempo_pfn.py

|

| 104 |

+

```

|

| 105 |

+

|

| 106 |

+

## 🚂 Training

|

| 107 |

+

|

| 108 |

+

### Single-GPU Training (for debugging)

|

| 109 |

+

```bash

|

| 110 |

+

torchrun --standalone --nproc_per_node=1 src/training/trainer_dist.py --config ./configs/train.yaml

|

| 111 |

+

```

|

| 112 |

+

|

| 113 |

+

### Multi-GPU Training (Single-Node)

|

| 114 |

+

|

| 115 |

+

This example uses 8 GPUs. The training script uses PyTorch DistributedDataParallel (DDP).

|

| 116 |

+

```bash

|

| 117 |

+

torchrun --standalone --nproc_per_node=8 src/training/trainer_dist.py --config ./configs/train.yaml

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

### Configuration

|

| 121 |

+

|

| 122 |

+

All training and model parameters are controlled via YAML files in `configs/` (architecture, optimizers, paths).

|

| 123 |

+

|

| 124 |

+

## 💾 Synthetic Data Generation

|

| 125 |

+

|

| 126 |

+

A core contribution of this work is our open-source synthetic data pipeline, located in `src/synthetic_generation/`. It combines diverse generators with a powerful augmentation cascade.

|

| 127 |

+

|

| 128 |

+

**Generators Used:**

|

| 129 |

+

|

| 130 |

+

* **Adapted Priors:** ForecastPFN, KernelSynth, GaussianProcess (GP), and CauKer (Structural Causal Models).

|

| 131 |

+

* **Novel Priors:** SDE (a flexible regime-switching Ornstein-Uhlenbeck process), Sawtooth, StepFunction, Anomaly, Spikes, SineWave, and Audio-Inspired generators (Stochastic Rhythms, Financial Volatility, Network Topology, Multi-Scale Fractals).

|

| 132 |

+

|

| 133 |

+

You can easily generate your own data by installing the development dependencies and instantiating a generator wrapper. See `examples/generate_synthetic_data.py` for a minimal script, or inspect the generator code in `src/synthetic_generation/`.

|

| 134 |

+

|

| 135 |

+

## 🤝 License

|

| 136 |

+

|

| 137 |

+

This project is licensed under the Apache 2.0 License. See the LICENSE file for details. This permissive license allows for both academic and commercial use.

|

| 138 |

+

|

| 139 |

+

## 📚 Citation

|

| 140 |

+

|

| 141 |

+

If you find TempoPFN useful in your research, please consider citing our paper:

|

| 142 |

+

```bibtex

|

| 143 |

+

@misc{moroshan2025tempopfn,

|

| 144 |

+

title={TempoPFN: Synthetic Pre-training of Linear RNNs for Zero-Shot Time Series Forecasting},

|

| 145 |

+

author={Vladyslav Moroshan and Julien Siems and Arber Zela and Timur Carstensen and Frank Hutter},

|

| 146 |

+

year={2025},

|

| 147 |

+

eprint={2510.25502},

|

| 148 |

+

archivePrefix={arXiv},

|

| 149 |

+

primaryClass={cs.LG}

|

| 150 |

+

}

|

| 151 |

+

```

|

configs/example.yaml

ADDED

|

@@ -0,0 +1,119 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

train_data_path: null # Replace with the path to root of the training data directory with subdirectories for each generator (e.g. gp, kernel, etc.)

|

| 2 |

+

model_path: ./models # Path where the model will be saved

|

| 3 |

+

model_name: TempoPFN

|

| 4 |

+

continue_training: false

|

| 5 |

+

checkpoint_path: null # Replace with the path to the checkpoint file

|

| 6 |

+

seed: 2025

|

| 7 |

+

wandb: true # whether to log to wandb

|

| 8 |

+

wandb_project_name: TempoPFNTraining

|

| 9 |

+

wandb_entity: university-of-freiburg-2024

|

| 10 |

+

wandb_plots: false

|

| 11 |

+

|

| 12 |

+

batch_size: 40

|

| 13 |

+

num_training_iterations: 1000000 # 1M

|

| 14 |

+

validation_batch_size: 64

|

| 15 |

+

num_validation_batches: 1

|

| 16 |

+

num_workers: 4

|

| 17 |

+

gradient_accumulation_enabled: true

|

| 18 |

+

accumulation_steps: 5 # Number of batches to accumulate before updating (effective batch size = batch_size * accumulation_steps)

|

| 19 |

+

log_interval: 2048

|

| 20 |

+

save_every: 100000

|

| 21 |

+

|

| 22 |

+

generator_proportions:

|

| 23 |

+

forecast_pfn: 1.0

|

| 24 |

+

gp: 1.0

|

| 25 |

+

kernel: 1.0

|

| 26 |

+

sawtooth: 1.0

|

| 27 |

+

sinewave: 1.0

|

| 28 |

+

step: 1.0

|

| 29 |

+

anomaly: 1.0

|

| 30 |

+

spike: 1.0

|

| 31 |

+

cauker_univariate: 1.0

|

| 32 |

+

ou_process: 3.0

|

| 33 |

+

audio_financial_volatility: 0.1

|

| 34 |

+

audio_multi_scale_fractal: 0.1

|

| 35 |

+

audio_network_topology: 0.5

|

| 36 |

+

audio_stochastic_rhythm: 0.5

|

| 37 |

+

augmented_per_sample_2048: 2.0

|

| 38 |

+

augmented_temp_batch_2048: 2.0

|

| 39 |

+

|

| 40 |

+

# Learning Rate Scheduler Configuration

|

| 41 |

+

lr_scheduler: cosine # Options: "warmup_stable_decay", "cosine_with_warmup", "cosine_with_restarts", "cosine"

|

| 42 |

+

|

| 43 |

+

# Learning Rate Parameters

|

| 44 |

+

peak_lr: 0.0002 # 2e-4 - Peak learning rate

|

| 45 |

+

min_lr_ratio: 0.01 # Minimum LR as fraction of peak LR

|

| 46 |

+

|

| 47 |

+

# WSD Scheduler Specific Parameters

|

| 48 |

+

warmup_ratio: 0.003 # 0.3% of total steps for warmup

|

| 49 |

+

stable_ratio: 0.90 # 90% of total steps at stable learning rate

|

| 50 |

+

decay_type: cosine # Type of decay: "cosine" or "linear"

|

| 51 |

+

|

| 52 |

+

# Alternative Scheduler Parameters (if using different schedulers)

|

| 53 |

+

num_cycles: 0.5 # For cosine_with_warmup: 0.5 = half cosine wave

|

| 54 |

+

num_restart_cycles: 4 # For cosine_with_restarts: number of restart cycles

|

| 55 |

+

|

| 56 |

+

# Optimizer Configuration

|

| 57 |

+

weight_decay: 0.01 # Weight decay for AdamW

|

| 58 |

+

beta1: 0.9 # Adam beta1 parameter

|

| 59 |

+

beta2: 0.98 # Adam beta2 parameter (optimized for transformers)

|

| 60 |

+

optimizer_eps: 1e-6 # Adam epsilon

|

| 61 |

+

|

| 62 |

+

# Training Stability

|

| 63 |

+

gradient_clip_val: 100.0

|

| 64 |

+

scaler: custom_robust

|

| 65 |

+

|

| 66 |

+

gift_eval:

|

| 67 |

+

evaluate_on_gift_eval: false

|

| 68 |

+

max_context_length: 3072

|

| 69 |

+

create_plots: false

|

| 70 |

+

max_plots: 5

|

| 71 |

+

dataset_storage_path: null # Replace with the path to the dataset storage path

|

| 72 |

+

|

| 73 |

+

data_augmentation:

|

| 74 |

+

nan_augmentation: true

|

| 75 |

+

scaler_augmentation: false

|

| 76 |

+

length_shortening: true

|

| 77 |

+

nan_stats_path: ./data/nan_stats.json

|

| 78 |

+

|

| 79 |

+

augmentation_probabilities:

|

| 80 |

+

scaler_augmentation: 0.5

|

| 81 |

+

|

| 82 |

+

TimeSeriesModel:

|

| 83 |

+

# Core architecture

|

| 84 |

+

embed_size: 512

|

| 85 |

+

num_encoder_layers: 10

|

| 86 |

+

|

| 87 |

+

# Scaling and preprocessing

|

| 88 |

+

scaler: custom_robust

|

| 89 |

+

epsilon: 0.00001

|

| 90 |

+

scaler_clamp_value: null

|

| 91 |

+

handle_constants: false

|

| 92 |

+

|

| 93 |

+

# Time features

|

| 94 |

+

K_max: 25

|

| 95 |

+

time_feature_config:

|

| 96 |

+

use_enhanced_features: true

|

| 97 |

+

use_holiday_features: false

|

| 98 |

+

use_index_features: true

|

| 99 |

+

include_seasonality_info: true

|

| 100 |

+

|

| 101 |

+

drop_enc_allow: false

|

| 102 |

+

encoding_dropout: 0.0

|

| 103 |

+

|

| 104 |

+

# Encoder configuration

|

| 105 |

+

encoder_config:

|

| 106 |

+

attn_mode: chunk

|

| 107 |

+

num_heads: 4

|

| 108 |

+

expand_v: 1.0

|

| 109 |

+

use_short_conv: true

|

| 110 |

+

conv_size: 32

|

| 111 |

+

allow_neg_eigval: true

|

| 112 |

+

hidden_ratio: 1.0

|

| 113 |

+

use_gate: true

|

| 114 |

+

use_forget_gate: true

|

| 115 |

+

num_householder: 4

|

| 116 |

+

weaving: true

|

| 117 |

+

|

| 118 |

+

loss_type: 'quantile'

|

| 119 |

+

quantiles: [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

|

data/dataset_properties.json

ADDED

|

@@ -0,0 +1,152 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"m4_yearly": {

|

| 3 |

+

"domain": "Econ/Fin",

|

| 4 |

+

"frequency": "A",

|

| 5 |

+

"num_variates": 1

|

| 6 |

+

},

|

| 7 |

+

"m4_quarterly": {

|

| 8 |

+

"domain": "Econ/Fin",

|

| 9 |

+

"frequency": "Q",

|

| 10 |

+

"num_variates": 1

|

| 11 |

+

},

|

| 12 |

+

"m4_monthly": {

|

| 13 |

+

"domain": "Econ/Fin",

|

| 14 |

+

"frequency": "M",

|

| 15 |

+

"num_variates": 1

|

| 16 |

+

},

|

| 17 |

+

"m4_weekly": {

|

| 18 |

+

"domain": "Econ/Fin",

|

| 19 |

+

"frequency": "W",

|

| 20 |

+

"num_variates": 1

|

| 21 |

+

},

|

| 22 |

+

"m4_daily": {

|

| 23 |

+

"domain": "Econ/Fin",

|

| 24 |

+

"frequency": "D",

|

| 25 |

+

"num_variates": 1

|

| 26 |

+

},

|

| 27 |

+

"m4_hourly": {

|

| 28 |

+

"domain": "Econ/Fin",

|

| 29 |

+

"frequency": "H",

|

| 30 |

+

"num_variates": 1

|

| 31 |

+

},

|

| 32 |

+

"electricity": {

|

| 33 |

+

"domain": "Energy",

|

| 34 |

+

"frequency": "W",

|

| 35 |

+

"num_variates": 1

|

| 36 |

+

},

|

| 37 |

+

"ett1": {

|

| 38 |

+

"domain": "Energy",

|

| 39 |

+

"frequency": "W",

|

| 40 |

+

"num_variates": 7

|

| 41 |

+

},

|

| 42 |

+

"ett2": {

|

| 43 |

+

"domain": "Energy",

|

| 44 |

+

"frequency": "W",

|

| 45 |

+

"num_variates": 7

|

| 46 |

+

},

|

| 47 |

+

"solar": {

|

| 48 |

+

"domain": "Energy",

|

| 49 |

+

"frequency": "W",

|

| 50 |

+

"num_variates": 1

|

| 51 |

+

},

|

| 52 |

+

"hospital": {

|

| 53 |

+

"domain": "Healthcare",

|

| 54 |

+

"frequency": "M",

|

| 55 |

+

"num_variates": 1

|

| 56 |

+

},

|

| 57 |

+

"covid_deaths": {

|

| 58 |

+

"domain": "Healthcare",

|

| 59 |

+

"frequency": "D",

|

| 60 |

+

"num_variates": 1

|

| 61 |

+

},

|

| 62 |

+

"us_births": {

|

| 63 |

+

"domain": "Healthcare",

|

| 64 |

+

"frequency": "M",

|

| 65 |

+

"num_variates": 1

|

| 66 |

+

},

|

| 67 |

+

"saugeen": {

|

| 68 |

+

"domain": "Nature",

|

| 69 |

+

"frequency": "M",

|

| 70 |

+

"num_variates": 1

|

| 71 |

+

},

|

| 72 |

+

"temperature_rain": {

|

| 73 |

+

"domain": "Nature",

|

| 74 |

+

"frequency": "D",

|

| 75 |

+

"num_variates": 1

|

| 76 |

+

},

|

| 77 |

+

"kdd_cup_2018": {

|

| 78 |

+

"domain": "Nature",

|

| 79 |

+

"frequency": "D",

|

| 80 |

+

"num_variates": 1

|

| 81 |

+

},

|

| 82 |

+

"jena_weather": {

|

| 83 |

+

"domain": "Nature",

|

| 84 |

+

"frequency": "D",

|

| 85 |

+

"num_variates": 21

|

| 86 |

+

},

|

| 87 |

+

"car_parts": {

|

| 88 |

+

"domain": "Sales",

|

| 89 |

+

"frequency": "M",

|

| 90 |

+

"num_variates": 1

|

| 91 |

+

},

|

| 92 |

+

"restaurant": {

|

| 93 |

+

"domain": "Sales",

|

| 94 |

+

"frequency": "D",

|

| 95 |

+

"num_variates": 1

|

| 96 |

+

},

|

| 97 |

+

"hierarchical_sales": {

|

| 98 |

+

"domain": "Sales",

|

| 99 |

+

"frequency": "W-WED",

|

| 100 |

+

"num_variates": 1

|

| 101 |

+

},

|

| 102 |

+

"loop_seattle": {

|

| 103 |

+

"domain": "Transport",

|

| 104 |

+

"frequency": "D",

|

| 105 |

+

"num_variates": 1

|

| 106 |

+

},

|

| 107 |

+

"sz_taxi": {

|

| 108 |

+

"domain": "Transport",

|

| 109 |

+

"frequency": "H",

|

| 110 |

+

"num_variates": 1

|

| 111 |

+

},

|

| 112 |

+

"m_dense": {

|

| 113 |

+

"domain": "Transport",

|

| 114 |

+

"frequency": "D",

|

| 115 |

+

"num_variates": 1

|

| 116 |

+

},

|

| 117 |

+

"bitbrains_fast_storage": {

|

| 118 |

+

"domain": "Web/CloudOps",

|

| 119 |

+

"frequency": "H",

|

| 120 |

+

"num_variates": 2

|

| 121 |

+

},

|

| 122 |

+

"bitbrains_rnd": {

|

| 123 |

+

"domain": "Web/CloudOps",

|

| 124 |

+

"frequency": "H",

|

| 125 |

+

"num_variates": 2

|

| 126 |

+

},

|

| 127 |

+

"bizitobs_application": {

|

| 128 |

+

"domain": "Web/CloudOps",

|

| 129 |

+

"frequency": "10S",

|

| 130 |

+

"num_variates": 2

|

| 131 |

+

},

|

| 132 |

+

"bizitobs_service": {

|

| 133 |

+

"domain": "Web/CloudOps",

|

| 134 |

+

"frequency": "10S",

|

| 135 |

+

"num_variates": 2

|

| 136 |

+

},

|

| 137 |

+

"bizitobs_l2c": {

|

| 138 |

+

"domain": "Web/CloudOps",

|

| 139 |

+

"frequency": "H",

|

| 140 |

+

"num_variates": 7

|

| 141 |

+

},

|

| 142 |

+

"dd_benchmark_short": {

|

| 143 |

+

"domain": "Web/Observability",

|

| 144 |

+

"frequency": "Short",

|

| 145 |

+

"num_variates": 32

|

| 146 |

+

},

|

| 147 |

+

"dd_benchmark_long": {

|

| 148 |

+

"domain": "Web/Observability",

|

| 149 |

+

"frequency": "Long",

|

| 150 |

+

"num_variates": 32

|

| 151 |

+

}

|

| 152 |

+

}

|

data/nan_stats.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

examples/generate_synthetic_data.py

ADDED

|

@@ -0,0 +1,204 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import logging

|

| 2 |

+

import os

|

| 3 |

+

from typing import List, Optional

|

| 4 |

+

|

| 5 |

+

import torch

|

| 6 |

+

|

| 7 |

+

from src.data.containers import BatchTimeSeriesContainer

|

| 8 |

+

from src.data.utils import sample_future_length

|

| 9 |

+

from src.plotting.plot_timeseries import plot_from_container

|

| 10 |

+

from src.synthetic_generation.anomalies.anomaly_generator_wrapper import (

|

| 11 |

+

AnomalyGeneratorWrapper,

|

| 12 |

+

)

|

| 13 |

+

from src.synthetic_generation.cauker.cauker_generator_wrapper import (

|

| 14 |

+

CauKerGeneratorWrapper,

|

| 15 |

+

)

|

| 16 |

+

from src.synthetic_generation.forecast_pfn_prior.forecast_pfn_generator_wrapper import (

|

| 17 |

+

ForecastPFNGeneratorWrapper,

|

| 18 |

+

)

|

| 19 |

+

from src.synthetic_generation.generator_params import (

|

| 20 |

+

AnomalyGeneratorParams,

|

| 21 |

+

CauKerGeneratorParams,

|

| 22 |

+

FinancialVolatilityAudioParams,

|

| 23 |

+

ForecastPFNGeneratorParams,

|

| 24 |

+

GPGeneratorParams,

|

| 25 |

+

KernelGeneratorParams,

|

| 26 |

+

MultiScaleFractalAudioParams,

|

| 27 |

+

NetworkTopologyAudioParams,

|

| 28 |

+

OrnsteinUhlenbeckProcessGeneratorParams,

|

| 29 |

+

SawToothGeneratorParams,

|

| 30 |

+

SineWaveGeneratorParams,

|

| 31 |

+

SpikesGeneratorParams,

|

| 32 |

+

StepGeneratorParams,

|

| 33 |

+

StochasticRhythmAudioParams,

|

| 34 |

+

)

|

| 35 |

+

from src.synthetic_generation.gp_prior.gp_generator_wrapper import GPGeneratorWrapper

|

| 36 |

+

from src.synthetic_generation.kernel_synth.kernel_generator_wrapper import (

|

| 37 |

+

KernelGeneratorWrapper,

|

| 38 |

+

)

|

| 39 |

+

from src.synthetic_generation.ornstein_uhlenbeck_process.ou_generator_wrapper import (

|

| 40 |

+

OrnsteinUhlenbeckProcessGeneratorWrapper,

|

| 41 |

+

)

|

| 42 |

+

from src.synthetic_generation.sawtooth.sawtooth_generator_wrapper import (

|

| 43 |

+

SawToothGeneratorWrapper,

|

| 44 |

+

)

|

| 45 |

+

from src.synthetic_generation.sine_waves.sine_wave_generator_wrapper import (

|

| 46 |

+

SineWaveGeneratorWrapper,

|

| 47 |

+

)

|

| 48 |

+

from src.synthetic_generation.spikes.spikes_generator_wrapper import (

|

| 49 |

+

SpikesGeneratorWrapper,

|

| 50 |

+

)

|

| 51 |

+

from src.synthetic_generation.steps.step_generator_wrapper import StepGeneratorWrapper

|

| 52 |

+

|

| 53 |

+

PYO_AVAILABLE = True

|

| 54 |

+

try:

|

| 55 |

+

import pyo # requires portaudio to be installed

|

| 56 |

+

except (ImportError, OSError):

|

| 57 |

+

PYO_AVAILABLE = False

|

| 58 |

+

else:

|

| 59 |

+

from src.synthetic_generation.audio_generators.financial_volatility_wrapper import (

|

| 60 |

+

FinancialVolatilityAudioWrapper,

|

| 61 |

+

)

|

| 62 |

+

from src.synthetic_generation.audio_generators.multi_scale_fractal_wrapper import (

|

| 63 |

+

MultiScaleFractalAudioWrapper,

|

| 64 |

+

)

|

| 65 |

+

from src.synthetic_generation.audio_generators.network_topology_wrapper import (

|

| 66 |

+

NetworkTopologyAudioWrapper,

|

| 67 |

+

)

|

| 68 |

+

from src.synthetic_generation.audio_generators.stochastic_rhythm_wrapper import (

|

| 69 |

+

StochasticRhythmAudioWrapper,

|

| 70 |

+

)

|

| 71 |

+

|

| 72 |

+

logging.basicConfig(

|

| 73 |

+

level=logging.INFO, format="%(asctime)s - %(levelname)s - %(message)s"

|

| 74 |

+

)

|

| 75 |

+

logger = logging.getLogger(__name__)

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

def visualize_batch_sample(

|

| 79 |

+

generator,

|

| 80 |

+

batch_size: int = 8,

|

| 81 |

+

output_dir: str = "outputs/plots",

|

| 82 |

+

sample_idx: Optional[int] = None,

|

| 83 |

+

prefix: str = "",

|

| 84 |

+

seed: Optional[int] = None,

|

| 85 |

+

) -> None:

|

| 86 |

+

os.makedirs(output_dir, exist_ok=True)

|

| 87 |

+

name = generator.__class__.__name__

|

| 88 |

+

logger.info(f"[{name}] Generating batch of size {batch_size}")

|

| 89 |

+

|

| 90 |

+

batch = generator.generate_batch(batch_size=batch_size, seed=seed)

|

| 91 |

+

values = torch.from_numpy(batch.values)

|

| 92 |

+

if values.ndim == 2:

|

| 93 |

+

values = values.unsqueeze(-1)

|

| 94 |

+

|

| 95 |

+

future_length = sample_future_length(range="gift_eval")

|

| 96 |

+

history_values = values[:, :-future_length, :]

|

| 97 |

+

future_values = values[:, -future_length:, :]

|

| 98 |

+

|

| 99 |

+

container = BatchTimeSeriesContainer(

|

| 100 |

+

history_values=history_values,

|

| 101 |

+

future_values=future_values,

|

| 102 |

+

start=batch.start,

|

| 103 |

+

frequency=batch.frequency,

|

| 104 |

+

)

|

| 105 |

+

|

| 106 |

+

indices = [sample_idx] if sample_idx is not None else range(batch_size)

|

| 107 |

+

for i in indices:

|

| 108 |

+

filename = (

|

| 109 |

+

f"{prefix}_{name.lower().replace('generatorwrapper', '')}_sample_{i}.png"

|

| 110 |

+

)

|

| 111 |

+

output_file = os.path.join(output_dir, filename)

|

| 112 |

+

title = f"{prefix.capitalize()} {name.replace('GeneratorWrapper', '')} Synthetic Series (Sample {i})"

|

| 113 |

+

plot_from_container(

|

| 114 |

+

container, sample_idx=i, output_file=output_file, show=False, title=title

|

| 115 |

+

)

|

| 116 |

+

logger.info(f"[{name}] Saved plot to {output_file}")

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

def generator_factory(global_seed: int, total_length: int) -> List:

|

| 120 |

+

generators = [

|

| 121 |

+

KernelGeneratorWrapper(

|

| 122 |

+

KernelGeneratorParams(global_seed=global_seed, length=total_length)

|

| 123 |

+

),

|

| 124 |

+

GPGeneratorWrapper(

|

| 125 |

+

GPGeneratorParams(global_seed=global_seed, length=total_length)

|

| 126 |

+

),

|

| 127 |

+

ForecastPFNGeneratorWrapper(

|

| 128 |

+

ForecastPFNGeneratorParams(global_seed=global_seed, length=total_length)

|

| 129 |

+

),

|

| 130 |

+